Quantrium Flux

When Google “blew up like a brook trout”

Artificial Intelligence is pretty amazing. With the world’s knowledge right at its imaginary fingertips, it seems capable of answering almost anything. Still, it has its blind spots: it is prone to hubris and errors in judgment (AI jargon calls these “hallucinations”). And when the results are funny, technology gets to show off its glitchy wit. (Is there AI jargon for that?)

One such moment was the recent Google AI Overviews episode, in which the feature took its “Search Me” attitude too far and confidently explained the meanings of idioms that didn’t exist. It all started when internet users fed Google grammatically plausible phrases, made-up terms, fake idioms, and random nonsense, asking for their “meaning.” Before long, social media platforms like X and Threads were flooded with users sharing the surprisingly detailed, often amusing AI-generated explanations they received. All of them convincing, believable, and far from the truth!

The proof of the pudding is in the eating

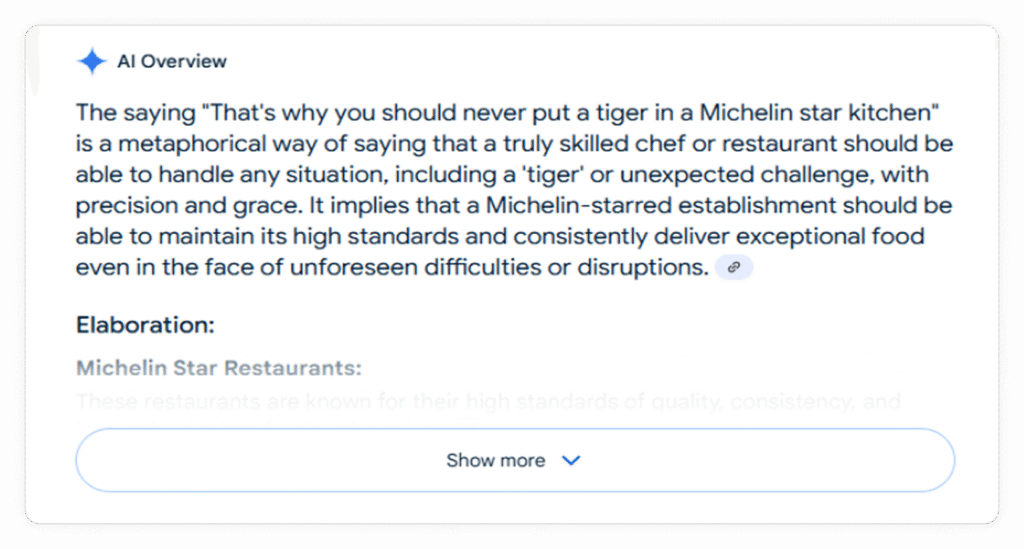

Here’s what one user shared:

“‘Never put a tiger in a Michelin star kitchen’ was meant to say: if you can’t handle the heat, get out of the kitchen. Guess Google’s response?”

(Image courtesy: Google/Alex Hughes)

Two buses going in the wrong direction is better than one going the right way.

Google’s interpretation

“A metaphorical way of expressing the value of having a supportive environment or a team that pushes you forward, even if their goals or values aren’t aligned with your own.”

What it actually meant

A metaphorical saying that values exploring several wrong paths, and learning from them, over the certainty of a single “right” path that may teach you less or may even mislead you.

Wine poured from the sky won’t make you fly.

Google’s interpretation

“A playful way of saying that something like wine is not a solution to a problem or a means to achieve a desired outcome, such as being able to fly. It suggests that what’s offered, even if seemingly abundant or desirable, won’t deliver on the promised result.”

What it actually meant

A poetic way of saying that miraculous or unrealistic events don’t grant impossible abilities.

There were other hilarious ones.

“Don’t give me homemade ketchup and tell me it’s the good stuff,” got the response, “AI Overview is not available for this search.”

Another user concocted the phrase “You can’t shake hands with an old bear” and got a response suggesting that the “old bear” was an untrustworthy person. Even nonsensical sayings such as “You can’t lick a badger twice” received plausible interpretations.

To put it in AI Overview style: it was truly a case of blowing up like a brook trout! (AI Overview confidently interpreted this made-up nonsense as a “colloquial way of saying something exploded or became a sensation quickly.”)

So, what do you think Google’s response was to all this, ahem, confident nonsense?

“At the scale of the web, with billions of queries coming in every day, there are bound to be some oddities and errors. We’ll keep improving when and how we show AI Overviews and strengthening our protections, including for edge cases, and we’re very grateful for the ongoing feedback…”

Google found that the fake definitions exposed not only inaccuracies but also the confident errors of large language models, insights that helped it decide more precisely when AI Overviews should be shown and when they should be withheld. The whole exercise was interesting for more than comic relief: it showed how hard large language models strain to produce an answer that sounds correct, even when it isn’t.

As one expert put it, “They were not trained to verify the truth. They were trained to complete the sentence.”

Outside of Google, this would qualify as taking something “without a pinch of salt”!

Hallucination

AI hallucinates because of limitations in its training data and architecture, which can lead it to generate false or misleading information that sounds plausible but is factually wrong. Models learn statistical patterns, along with any inaccuracies, from their vast training data. They predict a likely continuation rather than check facts, so they have no built-in way to tell truth from falsehood.
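To make the “complete the sentence” point concrete, here is a minimal, hypothetical sketch in Python: a toy bigram model that learns only which word tends to follow which. It is vastly simpler than a real large language model and is not how Google’s AI Overviews work; the corpus and the function names `train_bigrams` and `complete` are made up for illustration. What it shares with real models is the key trait: it continues text from learned patterns and never checks whether the result is true.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus of real idioms; real models train on vastly more text.
CORPUS = (
    "you can't judge a book by its cover . "
    "you can't teach an old dog new tricks . "
    "a bird in the hand is worth two in the bush ."
)


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def complete(counts, prompt, max_words=8):
    """Extend the prompt by repeatedly appending the most frequent next word.

    Nothing here checks whether the result is true or meaningful.
    """
    words = prompt.split()
    for _ in range(max_words):
        if not words:
            break  # empty prompt: nothing to continue from
        followers = counts.get(words[-1])
        if not followers:
            break  # last word never seen in training: this toy model stops
        next_word = followers.most_common(1)[0][0]
        words.append(next_word)
        if next_word == ".":
            break  # end of sentence
    return " ".join(words)


model = train_bigrams(CORPUS)
print(complete(model, "never shake hands with an old"))
# prints: never shake hands with an old dog new tricks .
print(complete(model, "you can't lick a badger"))
# prints: you can't lick a badger
```

Given a made-up prompt that ends in a word it has seen, the sketch fluently stitches on a familiar pattern and produces a plausible-sounding phrase with no real meaning. Given a prompt that ends in a word it has never seen, such as “badger”, this toy model simply stops.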


Take a Quantrium Leap

Take a Quantrium Leap and stay ahead and informed with the latest insights and strategies to navigate the evolving AI landscape. Reach us at info@quantrium.ai to start your journey.